Site speed is one of the most important aspects of creating web content and web applications.

In fact, various analysts at Google have noted that people often don't sit through the first 15 seconds of a video, much less the first 30, so it is wise to get the content of your site loaded as fast as you can so that people can make a judgement and decide whether to use it or not.

It may be a bit superficial that people judge sites that fast, but it is often the case, and we shouldn't ignore it.

Optimizing your site should be a top priority. WordPress and other engines often have nice plugins that help, but I will assume that you may not be using WordPress, because plenty of people don't, and I will give you a few of the best ways to optimize any site regardless of where it's hosted.

Images

Image optimization can be a tough topic, but it has a lot of angles to choose from. There are file formats, image optimization tools, and code/CSS best practices to follow to make sure you are saving and working with images in the best way possible.

I want to give an example of why this is important. It has recently become apparent to various iOS developers and app creators that apps using Retina-ready images take up 2–4 times as much space on a person's phone as their previous versions, and people's phones are simply running out of space from ordinary app downloads.

This isn't as relevant to us web developers and designers, but it does show just how important it is to handle your images properly on any platform. The following are what I think are the most important topics to remember when optimizing images for the web.

Image formats

Image formats are a heated topic, seemingly because everyone believes a different format will increase speed, but there is a prevalent school of thought that we can use as a de facto standard: JPEGs are for photographs, GIFs are for low-color or flat-color images, and PNGs are for everything else. Most web designers and developers I know prefer PNGs for just about everything, unless they have, say, a button with one or two colors, where they find GIFs work great. Of course you can play with those guidelines, but remember that they reflect what will save smaller and lighter versus bigger and heavier. If you are building a photography site, it will load fairly slowly compared to other sites regardless, so try some of the next methods to improve image optimization overall.

Image code

One of the worst things we can do for load time when serving images is let the code do the sizing for us. Well, that could be said for anything regarding 'letting the code do ____ for us'. The common saying is, "If you can do it, then do it", and it is a darn good one. Using attributes like width='50px' height='30px' to shrink a larger image can really hurt the load time for that image, because the browser still has to download the full-size file and then scale it down, a task that could have been done by the creator. So make sure you resize all of your images to the dimensions at which they will actually be displayed.
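As a rough illustration of the point above, the following Python sketch works out the dimensions an image should be resized to before it is uploaded, and how many more pixels an oversized source carries than the size it is displayed at. The function names fit_within and oversize_factor are hypothetical helpers made up for this example, and pixel count is only a rough proxy for file size.

```python
def fit_within(width, height, max_width, max_height):
    """Return (w, h) scaled down to fit inside the box, keeping the aspect ratio.

    Images that already fit are returned unchanged (the scale never exceeds 1).
    """
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


def oversize_factor(source_size, displayed_size):
    """How many times more pixels the source has than the displayed size."""
    source_w, source_h = source_size
    shown_w, shown_h = displayed_size
    return (source_w * source_h) / (shown_w * shown_h)


if __name__ == "__main__":
    # A 500x300 source squeezed into a 50x30 slot by HTML attributes alone.
    print(fit_within(500, 300, 50, 30))
    print(oversize_factor((500, 300), (50, 30)))
```

In this example the source carries 100 times the pixels that are shown, all of which the visitor still has to download. Resizing to the values returned by fit_within in an image editor or optimization tool avoids that waste.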

Image optimization tools

Tools are always helpful. Well, most of the time. Sometimes they are a burden and a distraction, but in this case they are often very useful. If you find a great image optimization tool, first of all, link it in the comments because we are all on the hunt. A few of my favorites follow. I love ImageOptim for Mac and RIOT for Windows. These two tools are very different but perform a similar task: you put images in, they work out a way to optimize them, do so, and hand back the final result in the same format you sent in. They are really quite nice, and there are tons more out there. In fact, there are a bunch that will analyze an image's bitmap and tell you what format is best. These are some of the most useful things in a web designer's toolbox other than a text editor and design program, and rightfully so.

Image based server optimization

I'm not an expert when it comes to setting up servers, but I have enough background on the small scale to give this advice: don't keep massive image collections stored locally. That is, don't leave a database of images on the same servers that serve your other site files. Take note of technologies such as Amazon S3 or Flickr's servers, and use those to serve your files. I recently set up an Amazon S3 bucket to serve our files, and it was actually quite easy, so feel free to try that. It is a great method. The main reason is that you don't want a database bottleneck on an instance that is serving multiple loads, because it can be a nightmare to diagnose. It's good practice to store separate files on different servers (if under massive load), unless of course it is just a simple general-purpose string storage database or something similar.

CSS and JavaScript Optimization

CSS and JavaScript are really important languages in web design, especially when it comes to creating dynamic content. I think people often forget that they can optimize their dynamic content, including their JavaScript and CSS. This isn't the most significant concern for smaller sites, but for larger sites it's really important, especially for sites that rely on a lot of design. Let's step through a few of the "CSS and JavaScript Rules" that are pretty standard when creating web applications.

First rule of CSS and JavaScript

If you can do it in CSS, then do it

Often we forget that we have amazing tools right in front of us, and I'd say CSS is one of the most amazing that web designers have. I'd also say that designers jump into Photoshop too quickly by nature (but it is their job, so who can blame them). Keep in mind, though, that as you design you have something in your browser that can do quick mockups too: CSS3. Take advantage of it! Having a place to do quick mockups really helps, and it will lead you away from hacked-together HTML later on. Instead of padding your markup with "&nbsp;", I am sure you can find a way to add that space in CSS, so do it!

Second rule of CSS and JavaScript

Minify, minify, minify!

The minification of code is perhaps one of the best and easiest things you can do to speed up your site. Keep in mind, we are talking milliseconds, but it still has a noticeable effect, especially if you are using something like the jQuery library. Remember that whenever you add JavaScript/CSS plugins and are given the option to download the minified version (and don't need to edit it), do so. Some of my favorite tools for this are Code Minifier for Mac, Minify for Windows, and JSCompress/CSSCompressor for those of you who want browser-based, cross-platform solutions. Happy minifying!

Third rule of CSS and JavaScript

In-line is a no-no

It is bad practice to use in-line CSS or JavaScript, but especially CSS. The reason is not only legacy issues: if we leave the CSS within the HTML code (especially in-line), the page reads as HTML/CSS/HTML/CSS/HTML/CSS/HTML/CSS instead of a simple HTML => CSS. This is bad for load times, because styles embedded in the markup are sent again with every page instead of being cached once as a separate file, and it can hurt most web applications when a designer refuses to put the styles in a separate file. It certainly won't cause your site to crash, but it will cause another employee to go through and extract it; it is that important. So always be the one who extracts it, not the one who leaves it for others to extract.

Fourth rule of CSS and JavaScript

Move it down

If you have to put your JavaScript in the page with the HTML itself, and have no way around it, then put it at the bottom of the HTML document. This helps speed up load time as well, because the functions and other JavaScript goodies run after the page itself has loaded. It also decreases the likelihood of a bug squashing the performance of the entire site, because a JavaScript bug in a site will often eat memory like there's no tomorrow. So it is good practice to make sure your site isn't doing that, and to guard against future cases in which it might. None of us want people to visit our site and then have their browsers crash.

Fifth rule of CSS and JavaScript

DOM optimization

Reduce the DOM if you can. For instance, if you are using a lot of jQuery that points to various DOM elements or reads through the whole DOM to find something, it can slow your site down quite a bit. There is a little saying I always loved and it fits here: "If you are doing things because it is the only way you know how, then there are probably better ways to do it." You could also say, "If you are doing things because it is the only way you know how, then you are doing it wrong," but that version is a bit harsher. In such a case, do the research and find those better ways.

If you are adding a div in HTML just because you need it for one little thing, and it is the only way you know how to do that, then it may not be the best way. Of course, using div tags because you need them for your CSS is entirely understandable, but perhaps you can remove a few and find a broader way of handling that style issue.

I recently did this myself while working on a Ruby on Rails project. Earlier in the week I nested roughly 5 divs within each other, in HAML of all things, just to do something I wanted (a box in a box in a box inside of something else in this case). I looked at it, knew it was crap, but didn't know a better way to do it, so I scrapped it all to redo it. Having to redo it made the work much harder, but it forced me to learn a new way to handle that issue. In the end I learned a lot from it, and I would recommend the same approach to anyone in the future. Go ahead and grab one of those knowledge nuggets for yourself! They are certainly low-hanging fruit.

General optimizations

These are more of the broad topics that really don't fit in anywhere else, but that I still feel deserve some attention. In fact, some of these may be the most important things you can do to speed up a web application or site.

Slashes on links

This is noticeably important. When a user opens a link without a slash at the very end, the server literally has to figure out what kind of file or page is at that address. The server will then add the slash itself, but if you add it yourself you save milliseconds of load time. These milliseconds all add up, I promise. I often find that people, designers especially, assume their unoptimized code will not burden anything, but it does in the end. If you save quarters for 10 years you will certainly have a lot of money, and the same concept applies here, just on a smaller or larger scale depending on your site's traffic.

Favicons

Browsers always request a favicon.ico file at the root level of your server, so you may as well go ahead and include one. Even if it is something temporary, it is always good to have. If you don't, the browser itself will record an 'internal 404' and just cache that 404 for favicon.ico, and we all know that reducing 404s speeds up load time.

Reduce cookie size

This one may not apply to all of us, but if you are developing web applications then reducing cookie size is really important. For instance, in what I am familiar with, Ruby on Rails applications, you can use cookies (or other methods) for authentication from session to session, and people often prefer the other methods because they can decrease user load times. A cookie does store things on your computer, so you may think it would speed up loading, but cookies are sent along with requests to the site, and typically all they are good for is authenticating user sessions or tracking you around the web (as Google and Facebook have been accused of). If you have to work with cookies, keep the size low and use your better judgement. If you have to, set a shorter expiry date to decrease the load time.

Cache

This is a massive topic, and one that I am not an expert on. Caching, though, is a pretty simple concept: storing files (typically HTML/CSS code) from sites you frequently visit on your computer so that you don't have to load them every time you visit.

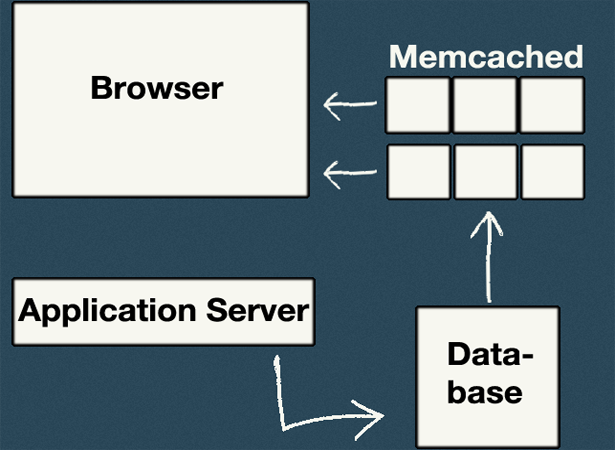

It is an incredibly useful technology, and one that a lot of web applications have started to employ in the past few years. There are also a number of caching solutions for databases, and probably the most notable is Memcached. It keeps copies of frequently requested database data in memory on the Memcached servers while people use a web application. So, for instance, if there are profiles that get visited often, it may hold that profile data in the cache, and the beauty of Memcached comes in the next phase. In your code, you can call the Memcached servers before you pull from the DB and see if a cached version of the data is available. If not, your code pulls the data from the database and adds it to the cache to save time next time. This is a beautiful example of caching on a large scale, and it has helped tons of companies speed up servers and databases over the past 2+ years.
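The look-in-the-cache-first flow described above can be sketched in Python roughly as follows. This is a minimal sketch under stated assumptions, not how any particular application does it: TinyCache is a hypothetical in-process stand-in for a Memcached client (so the example runs without a server), and FakeDatabase, get_profile, and the "profile:" key format are likewise invented for illustration.

```python
import time


class TinyCache:
    """Hypothetical stand-in for a Memcached client: a dict with expiry times."""

    def __init__(self):
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            # Entry is stale: drop it and report a miss.
            del self._store[key]
            return None
        return value

    def set(self, key, value, ttl_seconds=300):
        self._store[key] = (value, time.monotonic() + ttl_seconds)


class FakeDatabase:
    """Hypothetical database that counts how often it is actually read."""

    def __init__(self, rows):
        self._rows = rows
        self.reads = 0

    def find_profile(self, user_id):
        self.reads += 1
        return self._rows.get(user_id)


def get_profile(cache, db, user_id):
    """Check the cache first; on a miss, read the database and fill the cache."""
    key = f"profile:{user_id}"
    profile = cache.get(key)
    if profile is None:
        profile = db.find_profile(user_id)
        if profile is not None:
            cache.set(key, profile)
    return profile


if __name__ == "__main__":
    db = FakeDatabase({1: {"name": "Ada"}})
    cache = TinyCache()
    get_profile(cache, db, 1)  # miss: reads the database, fills the cache
    get_profile(cache, db, 1)  # hit: served from the cache
    print(db.reads)
```

The second lookup never touches the database, which is where the time savings come from. With a real Memcached deployment the cache lives on separate servers shared by every application process, rather than in a dict inside one process.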

And that just about sums it up. Those aren't all of the ways to speed up your site, of course, but they should pique your curiosity and get you looking for all the great things out there that will.

Dain Miller

Dain Miller is a former Presidential Innovation Fellow at The White House, and mentor for developers at starthere.fm. He now works to lead engineering teams at a distributed media company. You can find him on Twitter @dainmiller or at his website
