When Google and other search engines index websites, they don't execute JavaScript. This seems to put single page sites — many of which rely on JavaScript — at a tremendous disadvantage compared to a traditional website.

Not being on Google could easily mean the death of a business, and this daunting pitfall could tempt the uninformed to abandon single page sites altogether.

However, single page sites actually have an advantage over traditional websites in search engine optimization (SEO) because Google and others have recognized the challenge. They have created a mechanism for single page sites to not only have their dynamic pages indexed, but also optimize their pages specifically for crawlers.

In this article we'll focus on Google, but other large search engines such as Yahoo! and Bing support the same mechanism.

How Google crawls a single page site

When Google indexes a traditional website, its web crawler (called a Googlebot) first scans and indexes the content of the top-level URI (for example, www.myhome.com). Once this is complete, it follows all of the links on that page and indexes those pages as well. It then follows the links on the subsequent pages, and so on. Eventually it indexes all the content on the site and associated domains.

When the Googlebot tries to index a single page site, all it sees in the HTML is a single empty container (usually an empty div or body tag), so there's nothing to index and no links to crawl, and it indexes the site accordingly (in the round circular "folder" on the floor next to its desk).

If that were the end of the story, it would be the end of single page sites for many web applications and sites. Fortunately, Google and other search engines have recognized the importance of single page sites and provided tools that let developers give the crawler search information that can be better than what a traditional website offers.

How to make a single page site crawlable

The first key to making our single page site crawlable is to realize that our server can tell whether a request is being made by a crawler or by a person using a web browser, and respond accordingly. When our visitor is a person using a web browser, we respond as normal. For a crawler, we return a page optimized to show the crawler exactly what we want it to see, in a format it can easily read.

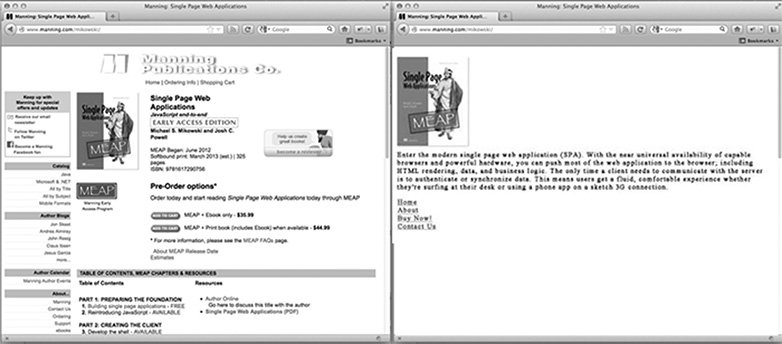

For the home page of our site, what does a crawler-optimized page look like? It's probably our logo or other primary image we'd like appearing in search results, some SEO-optimized text explaining what the site is or does, and a list of HTML links to only those pages we want Google to index. What the page doesn't have is any CSS styling or complex HTML structure applied to it. Nor does it have any JavaScript, or links to areas of the site we don't want Google to index (like legal disclaimer pages or other pages we don't want people to enter through a Google search). The accompanying image shows how a page might be presented to a browser (on the left) and to the crawler (on the right).
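As a rough illustration of such a page, here is a minimal sketch in Node.js-style JavaScript. The `site` object, its image path, description, and link targets are all hypothetical placeholders, and `renderCrawlerHome` is a name made up for this example, not part of any framework.

```javascript
// Hypothetical site data: the title, image path, text, and links are placeholders.
const site = {
  title: "My Home",
  logo: "/img/logo.png",
  description: "My Home helps you find and compare houses for sale.",
  // Only the pages we want indexed; no legal or disclaimer pages.
  links: [
    { href: "/index.htm#!page=listings", text: "Listings" },
    { href: "/index.htm#!page=about", text: "About us" }
  ]
};

// Escape text so it is safe to place inside HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build a bare page: no CSS, no JavaScript, just an image, text, and links.
function renderCrawlerHome(data) {
  const links = data.links
    .map(l => `<li><a href="${escapeHtml(l.href)}">${escapeHtml(l.text)}</a></li>`)
    .join("\n      ");
  return `<!DOCTYPE html>
<html>
  <head><title>${escapeHtml(data.title)}</title></head>
  <body>
    <img src="${escapeHtml(data.logo)}" alt="${escapeHtml(data.title)}">
    <p>${escapeHtml(data.description)}</p>
    <ul>
      ${links}
    </ul>
  </body>
</html>`;
}
```

Calling `renderCrawlerHome(site)` returns the HTML string the server could send to a crawler requesting the home page.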

Customizing content for crawlers

Typically, single page sites link to different content using a hash bang (#!). These links aren't followed the same way by people and crawlers.

For instance, if in our single page site a link to the user page looks like /index.htm#!page=user:id,123, the crawler would see the #! and know to look for a web page with the URI /index.htm?_escaped_fragment_=page=user:id,123. Knowing that the crawler will follow the pattern and look for this URI, we can program the server to respond to that request with an HTML snapshot of the page that would normally be rendered by JavaScript in the browser.
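The rewrite the crawler performs can be sketched as a pair of small functions. This is a simplified illustration: the function names are made up for this example, and the fragment is passed through unchanged, whereas a real crawler may also percent-encode some special characters.

```javascript
// Rewrite a hash-bang URI into the form the crawler requests.
// Simplifying assumption: the fragment is copied as-is, with no extra escaping.
function toCrawlerUri(uri) {
  const index = uri.indexOf("#!");
  if (index === -1) return uri; // no hash bang, nothing to rewrite
  const base = uri.slice(0, index);
  const fragment = uri.slice(index + 2);
  // Append to an existing query string with "&", otherwise start one with "?".
  const separator = base.includes("?") ? "&" : "?";
  return base + separator + "_escaped_fragment_=" + fragment;
}

// Reverse mapping: recover the hash-bang URI a person would visit.
function toBrowserUri(uri) {
  const match = uri.match(/^(.*?)[?&]_escaped_fragment_=(.*)$/);
  if (!match) return uri;
  return match[1] + "#!" + match[2];
}

console.log(toCrawlerUri("/index.htm#!page=user:id,123"));
// prints "/index.htm?_escaped_fragment_=page=user:id,123"
```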

That snapshot will be indexed by Google, but anyone clicking on our listing in Google search results will be taken to /index.htm#!page=user:id,123. The single page site JavaScript will take over from there and render the page as expected.

This provides single page site developers with the opportunity to tailor their site specifically for Google and specifically for users. Instead of having to write text that's both legible and attractive to a person and understandable by a crawler, pages can be optimized for each without worrying about the other. The crawler's path through our site can be controlled, allowing us to direct people from Google search results to a specific set of entrance pages. This will require more work on the part of the engineer to develop, but it can have big pay-offs in terms of search result position and customer retention.

Detecting Google's web crawler

At the time of this writing, the Googlebot announces itself as a crawler to the server by making requests with a user-agent string of Googlebot/2.1 (+http://www.googlebot.com/bot.html). A Node.js application can check for this user-agent string in the middleware and send back the crawler-optimized home page if it matches. Otherwise, we can handle the request normally.
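A minimal sketch of that check, written as Connect/Express-style middleware with the usual `(req, res, next)` signature. The names `isCrawler` and `crawlerMiddleware`, and the `renderCrawlerPage` callback, are assumptions for illustration. Matching on the substring "Googlebot" rather than the full string is also a choice made here, so that minor version changes still match.

```javascript
// Substring of the user-agent string quoted above.
const CRAWLER_PATTERN = /Googlebot/i;

// True when the user-agent header looks like the Googlebot.
function isCrawler(userAgent) {
  return typeof userAgent === "string" && CRAWLER_PATTERN.test(userAgent);
}

// Returns middleware that answers crawlers itself and passes everyone else on.
// renderCrawlerPage is a hypothetical callback that returns an HTML string.
function crawlerMiddleware(renderCrawlerPage) {
  return function (req, res, next) {
    if (isCrawler(req.headers["user-agent"])) {
      res.writeHead(200, { "Content-Type": "text/html" });
      res.end(renderCrawlerPage(req));
      return;
    }
    next(); // a person using a browser: handle the request normally
  };
}
```

Keep in mind that anyone can send this header, so the check identifies what a client claims to be, not what it is.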

This arrangement seems like it would be complicated to test, since we don't own a Googlebot. Google offers a service to do this for publicly available production websites as part of its Webmaster Tools, but an easier way to test is to spoof our user-agent string. This used to require some command-line hackery, but Chrome Developer Tools makes it as easy as clicking a button and checking a box:

- Open the Chrome Developer Tools by clicking the button with three horizontal lines to the right of the Google Toolbar, and then selecting Tools from the menu and clicking on Developer Tools.

- In the lower-right corner of the screen is a gears icon: click on that and see some advanced developer options such as disabling cache and turning on logging of XmlHttpRequests.

- In the second tab, labelled Overrides, click the check box next to the User Agent label and select any number of user agents from the drop-down from Chrome, to Firefox, to IE, iPads, and more. The Googlebot agent isn’t a default option. In order to use it, select Other and copy and paste the user-agent string into the provided input.

- Now that tab is spoofing itself as a Googlebot, and when we open any URI on our site, we should see the crawler page.
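The same spoofing can also be scripted. The following is a small sketch using Node's built-in `http` module; the helper names are made up for this example, and it assumes the site is served over plain HTTP.

```javascript
const http = require("http");

// The user-agent string quoted earlier in this article.
const GOOGLEBOT_UA = "Googlebot/2.1 (+http://www.googlebot.com/bot.html)";

// Build http.get options that present the request as coming from the Googlebot.
function crawlerRequestOptions(uri) {
  const url = new URL(uri);
  return {
    hostname: url.hostname,
    port: url.port || 80,
    path: url.pathname + url.search,
    headers: { "User-Agent": GOOGLEBOT_UA }
  };
}

// Fetch a page as the crawler would and hand the body to the callback.
function fetchAsCrawler(uri, callback) {
  http.get(crawlerRequestOptions(uri), res => {
    let body = "";
    res.setEncoding("utf8");
    res.on("data", chunk => { body += chunk; });
    res.on("end", () => callback(null, body));
  }).on("error", callback);
}
```

With a development server running (the address `http://localhost:3000/` is only an assumed example), `fetchAsCrawler("http://localhost:3000/", (err, html) => console.log(err || html));` should print the crawler page rather than the normal one.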

In conclusion

Obviously, different applications will have different needs with regard to web crawlers, but always returning the same single page to the Googlebot is probably not enough. We'll also need to decide which pages we want to expose, and provide ways for our application to map the _escaped_fragment_=key=value URI to the content we want to show the crawler.
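One way to do that mapping is a lookup table of snapshot renderers. The sketch below assumes the `page=name:key,value` fragment layout from the example URI used earlier in this article. The `snapshots` table, its renderers, and the function names are hypothetical and would need adapting to a real application's URI scheme.

```javascript
// Escape text so request data is safe to place inside HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical snapshot renderers, keyed by page name.
const snapshots = {
  user: params => `<h1>User ${escapeHtml(params.id)}</h1>`,
  home: () => "<h1>Welcome</h1>"
};

// Parse "page=user:id,123" into { page: "user", params: { id: "123" } }.
function parseFragment(fragment) {
  const [key, value = ""] = fragment.split("=");
  if (key !== "page") return null;
  const [page, rest = ""] = value.split(":");
  const parts = rest ? rest.split(",") : [];
  const params = {};
  // Parameters arrive as alternating key,value entries.
  for (let i = 0; i + 1 < parts.length; i += 2) {
    params[parts[i]] = parts[i + 1];
  }
  return { page, params };
}

// Return the HTML snapshot for a crawler request, or null if none applies.
function snapshotFor(requestUrl) {
  // A base is required to parse a relative request URL; its value is irrelevant here.
  const url = new URL(requestUrl, "http://localhost");
  const fragment = url.searchParams.get("_escaped_fragment_");
  if (fragment === null) return null;
  const parsed = parseFragment(fragment);
  if (!parsed || !Object.prototype.hasOwnProperty.call(snapshots, parsed.page)) {
    return null;
  }
  return snapshots[parsed.page](parsed.params);
}

console.log(snapshotFor("/index.htm?_escaped_fragment_=page=user:id,123"));
// prints "<h1>User 123</h1>"
```

A `null` result means the request is not a crawler snapshot request we recognize, so the server can fall through to its normal handling or return a 404.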

You may want to get fancy and tie the server response in to the front-end framework, but I usually take the simpler approach here: create custom pages for the crawler and serve them from a separate router file.

There are also a lot more legitimate crawlers out there, so once we've adjusted our server for the Google crawler we can expand to include them as well.
